Key Takeaways

| Point | Description |
|---|---|
| Models Introduced | Anthropic announced three new models: Haiku (smallest), Sonnet (medium), and Opus (largest) |
| Benchmarks | Anthropic reports that Opus outperforms GPT-4 on various benchmarks, including math, reasoning, and multilingual tasks |
| Capabilities | All three models are vision-language models that can process both images and text |
| Speed | Sonnet is 2x faster than Claude 2, and Haiku is the fastest of the three |
| Cost | Haiku is the most cost-effective, with the lowest token pricing and the same 200K context window as Sonnet and Opus |
| Availability | Sonnet and Opus are available on Anthropic’s API; Sonnet is also on Amazon Bedrock and in private preview on Google Cloud’s Vertex AI |

An Overview of Anthropic’s Claude 3 Models

Anthropic recently unveiled a new family of AI models called Claude 3, comprising three distinct models: Haiku, Sonnet, and Opus. These models represent varying sizes and capabilities, with Opus being the largest and most powerful of the trio.

Comparing All Claude Models: Benchmarks and Performance

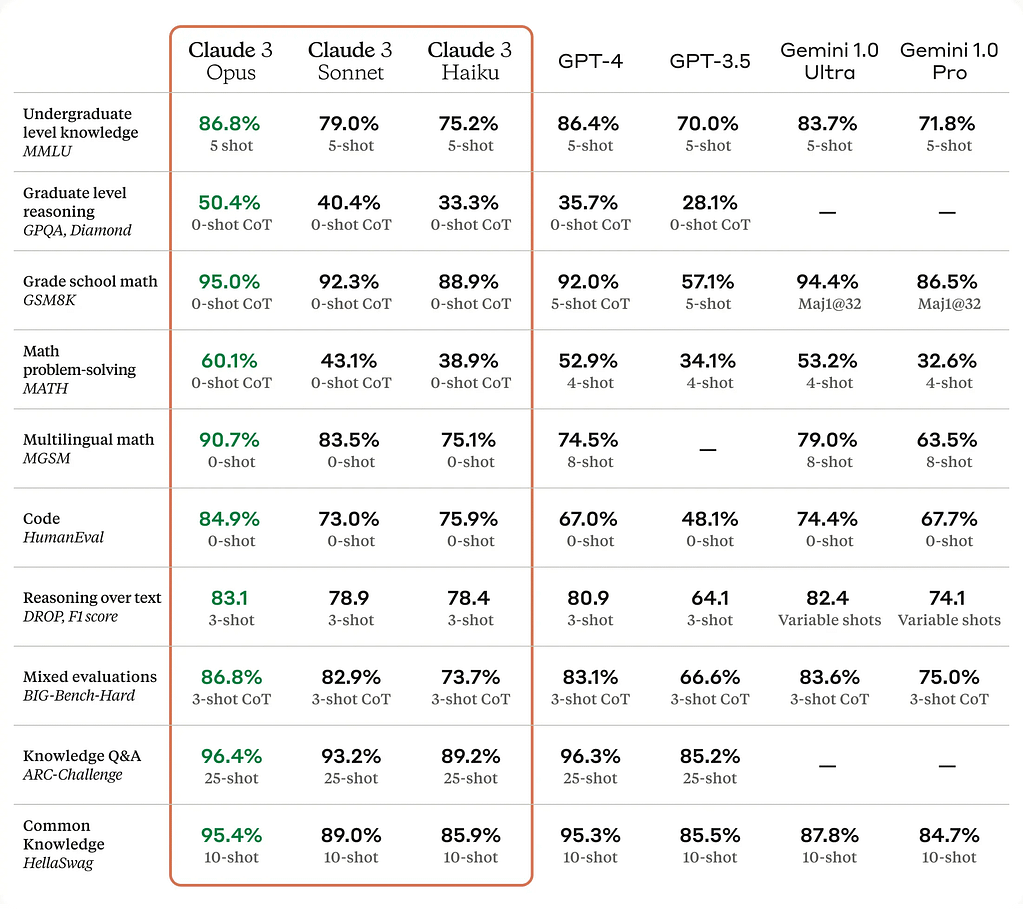

One of the key highlights of the Claude 3 release is the strong benchmark performance of the Opus model. According to Anthropic, Opus outperforms OpenAI’s GPT-4 on grade school math (GSM8K), math problem-solving (MATH), and graduate-level reasoning (GPQA).

Benchmark Scores

| Benchmark | Opus | GPT-4 |
|---|---|---|
| Grade School Math (Zero-shot) | 95% | 92% (5-shot) |
| Math Problem Solving (Zero-shot) | Higher than GPT-4 | – |
| Graduate-level Reasoning (GPQA) | Higher than GPT-4 | – |

However, Anthropic acknowledges that on some benchmarks, newer versions of GPT-4 may score higher than the GPT-4 figures it reports.

Comparing All Claude Models: Multimodal Capabilities

One standout feature of the Claude 3 models is their ability to process both text and images, making them vision-language models (VLMs). This multimodal capability allows the models to reason over various types of data, including charts, presentations, PDFs, and images.

Multimodal Examples

| Input | Example |
|---|---|
| Text | “What is the capital of France?” |
| Image | A photo of the Eiffel Tower |
| Document (e.g. PDF) | An excerpt from a financial report |

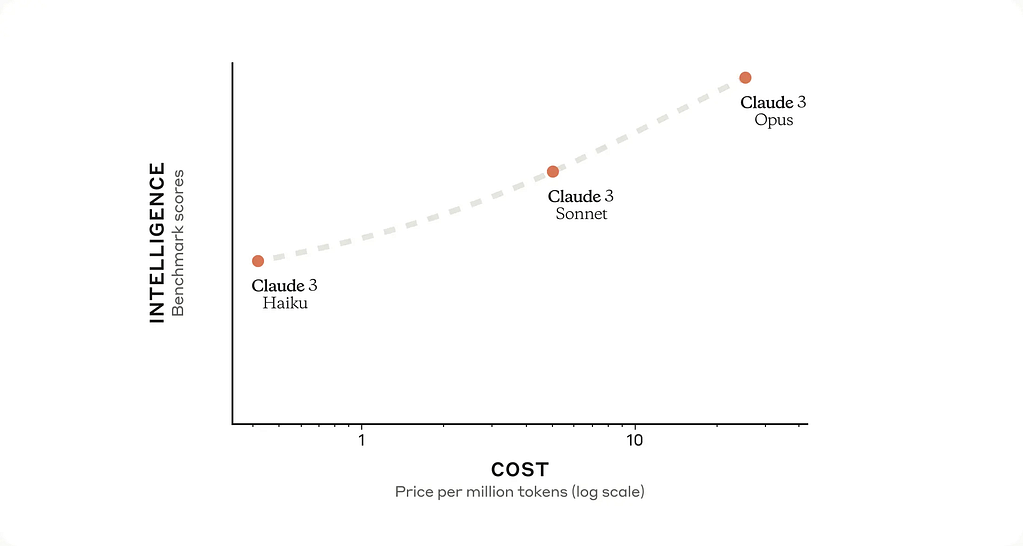
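To make the multimodal input concrete, here is a minimal Python sketch that builds a request body pairing an image with a text question, assuming the content-block shape used by Anthropic's Messages API (an `image` block with a base64 source, followed by a `text` block). It only constructs the payload and makes no network call. The function name `build_image_message` is hypothetical, `claude-3-opus-20240229` is assumed to be the launch identifier for Opus, and the placeholder bytes stand in for a real image such as the Eiffel Tower photo in the example above.

```python
import base64
import json
from typing import Any


def build_image_message(image_bytes: bytes, media_type: str, question: str,
                        model: str = "claude-3-opus-20240229",
                        max_tokens: int = 1024) -> dict[str, Any]:
    """Build a Messages-API-style request body with one image and one question.

    Hypothetical helper: the block layout follows the Messages API content
    format as we understand it; verify against Anthropic's API reference.
    """
    # The API expects image data as a base64 string plus an explicit media type.
    encoded = base64.standard_b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Image block: base64-encoded source with its media type.
                    {"type": "image",
                     "source": {"type": "base64", "media_type": media_type,
                                "data": encoded}},
                    # Text block: the question to ask about the image.
                    {"type": "text", "text": question},
                ],
            }
        ],
    }


if __name__ == "__main__":
    fake_png = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real image
    body = build_image_message(fake_png, "image/png",
                               "Which landmark is shown in this photo?")
    print(json.dumps(body, indent=2))
```

With the official `anthropic` Python SDK installed and an API key configured, the same dictionary could, for example, be sent with `anthropic.Anthropic().messages.create(**body)`.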

Comparing All Claude Models: Speed and Cost

Anthropic claims that the Claude 3 models are faster than their predecessors. Specifically, Sonnet is said to be twice as fast as Claude 2, while Haiku is the fastest of the three.

In terms of cost, Haiku stands out as the most economical option. With the same 200K context window as its larger siblings, multimodal capabilities, and token pricing lower than GPT-3.5, Haiku could be the star of the show for many use cases.

Token Pricing

Here are the details of each model:

| Model | Claude 3 Opus | Claude 3 Sonnet | Claude 3 Haiku |
|---|---|---|---|
| Description | The most advanced and intelligent model, excelling at highly complex tasks with human-like understanding. | Balances intelligence and speed, ideal for enterprise workloads requiring strong performance and endurance. | The fastest and most compact model, designed for near-instant responsiveness and seamless AI experiences. |
| Cost per million tokens (input / output) | $15 / $75 | $3 / $15 | $0.25 / $1.25 |
| Context Window | 200K | 200K | 200K |
| Potential Uses | Task automation across APIs, databases, and coding; R&D: research review, brainstorming, hypothesis generation; Strategy: advanced analysis of charts, financials, and forecasting | Data processing and knowledge retrieval at scale; Sales: product recommendations, forecasting, targeted marketing; Time-saving tasks: code generation, quality control, text extraction from images | Customer interactions: quick support, translations; Content moderation: identifying risky behavior or requests; Cost-saving tasks: logistics optimization, inventory management, knowledge extraction |
| Differentiator | Highest intelligence, surpassing other commercially available models. | More affordable than peers with similar intelligence, better for large-scale deployments. | Smarter, faster, and more cost-effective than models in its category. |
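Because prices are quoted per million tokens, the cost of a request is (input tokens × input price + output tokens × output price) / 1,000,000. The Python sketch below applies that formula to the launch prices from the table above. The `PRICING` mapping and `request_cost` helper are illustrative names, not part of any Anthropic SDK, and current prices should be checked against Anthropic's pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Prices in USD per million tokens."""
    input_per_mtok: float
    output_per_mtok: float


# Launch prices taken from the table; these may have changed since.
PRICING = {
    "opus": Pricing(input_per_mtok=15.00, output_per_mtok=75.00),
    "sonnet": Pricing(input_per_mtok=3.00, output_per_mtok=15.00),
    "haiku": Pricing(input_per_mtok=0.25, output_per_mtok=1.25),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a single request with the given token counts."""
    p = PRICING[model]
    # Input and output tokens are billed at different per-million rates.
    return (input_tokens * p.input_per_mtok
            + output_tokens * p.output_per_mtok) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 input tokens and 1,000 output tokens.
    for name in PRICING:
        print(f"{name:>6}: ${request_cost(name, 10_000, 1_000):.5f}")
```

For that hypothetical request, the formula gives $0.225 on Opus, $0.045 on Sonnet, and $0.00375 on Haiku: a 60x spread between the largest and smallest model, since both the input and output prices differ by that factor.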

Comparing All Claude Models: Availability and Access

Anthropic made Opus and Sonnet available through its generally available API immediately after the announcement, so developers can sign up and start using them right away. Claude 3 Haiku is expected to follow soon.

Sonnet powers the free experience on claude.ai, while Opus is available to Claude Pro subscribers.

Sonnet is also available on Amazon Bedrock and in private preview on Google Cloud’s Vertex AI Model Garden, with Opus and Haiku expected to be added to both platforms in the near future.


Continuous Improvement: Smarter, Faster, Safer

Anthropic believes that the intelligence of AI models has yet to reach its limits, and it plans to release frequent updates to the Claude 3 model family over the next few months. It also plans to add features that enhance the models’ capabilities, particularly for enterprise use cases and large-scale deployments, including Tool Use (function calling), interactive coding (REPL), and more advanced agentic capabilities.

As Anthropic pushes the boundaries of AI capabilities, it remains equally committed to ensuring that its safety guardrails keep pace with these leaps in performance. Its hypothesis is that being at the forefront of AI development is the most effective way to steer its trajectory towards positive societal outcomes.

Conclusion

Anthropic’s Claude 3 models represent a significant advancement in the field of AI, offering impressive performance, multimodal capabilities, and cost-effective options. While the Opus model shines in benchmarks, the Haiku model could be the true star, combining affordability with speed and versatility. As these models become more widely available, it will be interesting to see how developers and researchers leverage their capabilities in various applications.
